Written By: Ross Wohlgemuth

Edited By: Sydney Wyatt

Among the plethora of dieting options out there, it can be difficult to find the right one for you, and sticking with it long enough to see results weeks or months later can be even more challenging. Whether it’s a traditional low-fat diet (LFD) or something more complex like intermittent fasting, each eating regimen has pros and cons that suit different types of people with different goals in mind. Often, people choose the wrong diet or fail to stick with it, and do not reach their goals. In worse cases, following a strict diet can lead people to develop or worsen an eating disorder. There is also a lot of misinformation about dieting, so it would be a shame if your 2020 resolution centered around a diet with no scientific backing. Whatever the case may be, choosing a diet and sticking with it should be done under medical supervision.

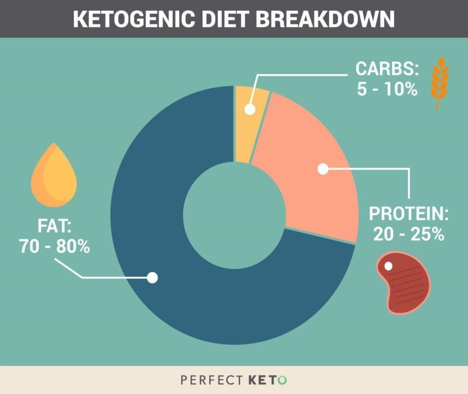

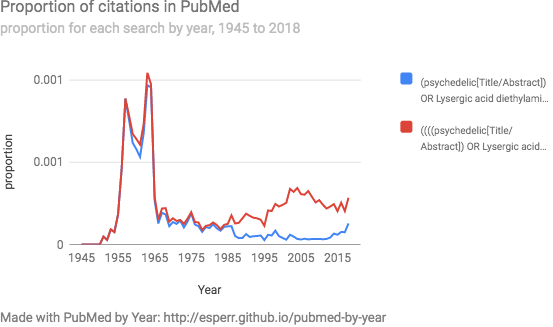

Fig. 1. Sample ketogenic diet macronutrient proportions, which differ substantially from the USDA recommendation, especially in the carbohydrate category. Image from [3].

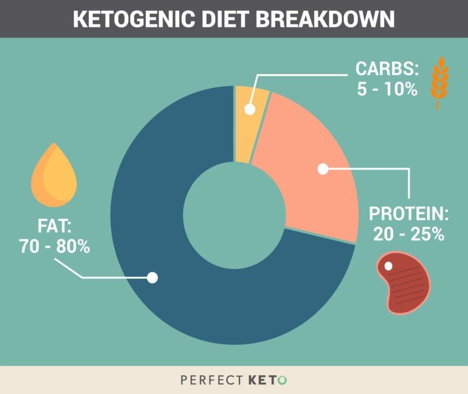

The basis of the ketogenic diet (KD) is that when your carbohydrate intake is low, your body can adapt by producing an alternative energy source called ketone bodies (KBs) [4]. KBs are made by mitochondria in the liver from fatty-acid-derived acetyl-CoA. They circulate throughout the body and even cross the blood-brain barrier to provide a source of energy to your cells [4,5] (Figure 2). Thus, even without consuming the recommended amount of carbohydrates, your body can still function just fine by running on fats, for the most part. The jury is still out on keto, however, as some doctors and health organizations remain hesitant to proclaim it a safe way to eat.

For most people, one of the biggest factors in choosing a diet is how effective it is at promoting weight loss. In the case of the KD, the science seems to support its effectiveness. A meta-analysis [6] of thirteen randomized controlled trials comparing long-term weight loss on a KD versus an LFD found that five of the parameters tested differed between the KD and LFD groups, one of them being weight loss. On average, the KD groups lost 2 pounds more than the LFD groups. The KD groups also experienced decreases in blood triglycerides and diastolic blood pressure, and increases in both high-density and low-density lipoprotein (HDL and LDL) cholesterol.

Fig. 2. Schematic of ketone body (KB) formation. 3-Hydroxybutyrate, a KB, is produced downstream of fatty acid oxidation, which yields acetyl-CoA. Acetyl-CoA is converted into HMG-CoA, which is in turn converted into acetoacetate, the precursor to 3-hydroxybutyrate. Figure and caption adapted from [5].

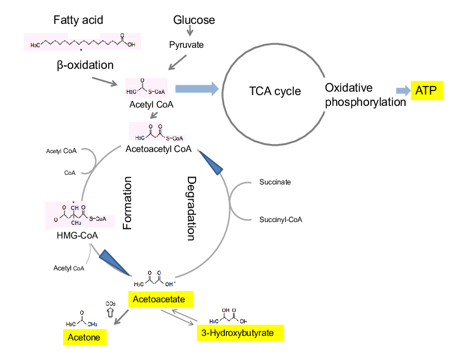

Another factor to consider when choosing a diet is whether it improves your physical fitness or athletic performance. Under scientific scrutiny, the KD appears to benefit athleticism only in certain cases. In a pilot study of five endurance athletes (four female and one male) aged 49 to 55, each athlete followed a KD for 10 weeks while keeping their typical training schedule of 6 to 12 hours per week of cycling or running [7]. At the end of the period, the athletes were tested for body composition and athletic ability, and were also asked to describe their experiences on the diet.

Fig. 3. Weight loss, time to exhaustion (TTE), and second ventilatory threshold (VT2) in endurance athletes from [7].

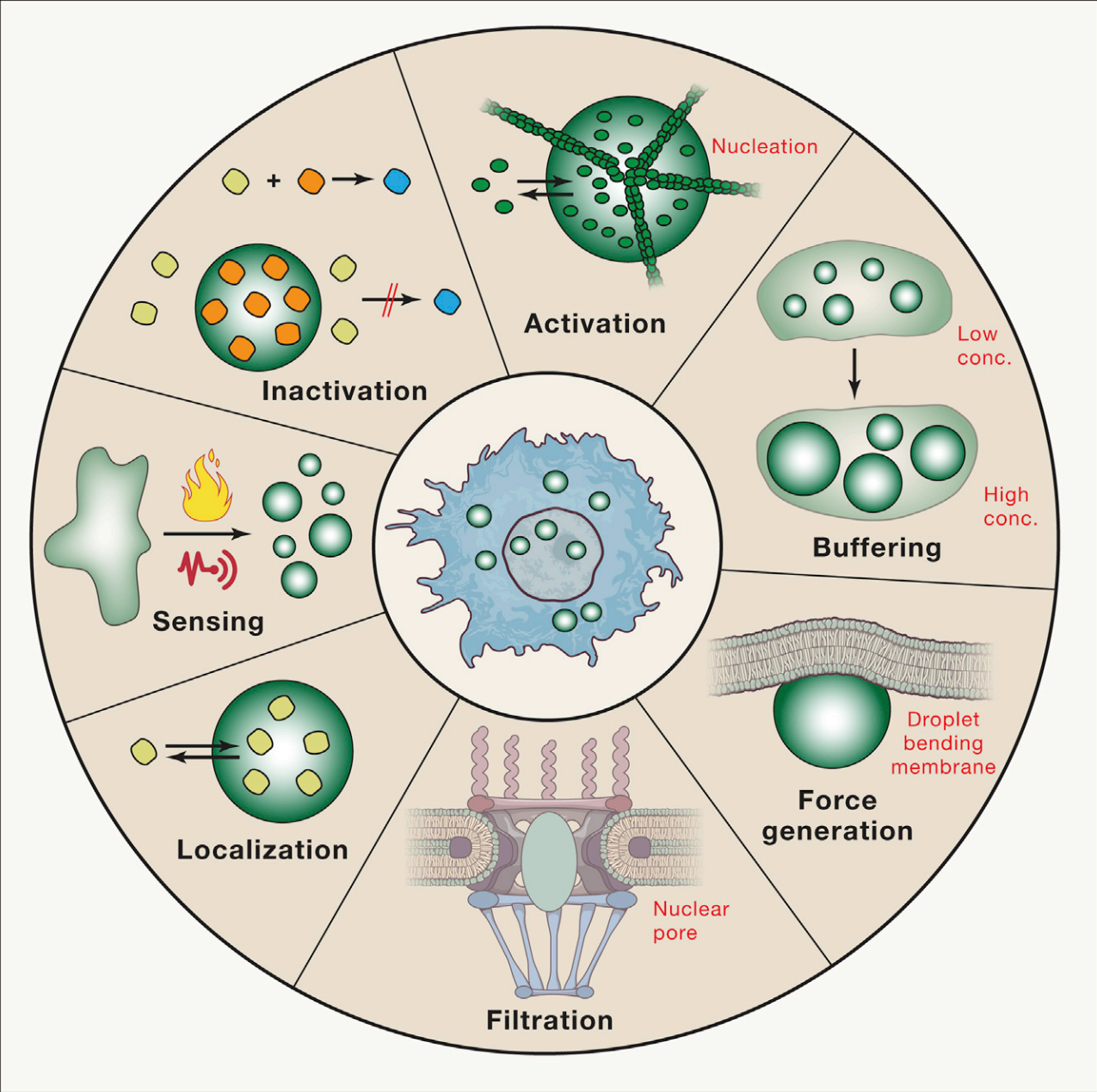

The researchers concluded that although the KD improved body composition and reduced weight, it did not improve endurance performance. They hypothesized that the lack of athletic improvement was due to lower rates of glycolytic metabolism (metabolism that uses glucose as the primary substrate), which could hinder performance in high-intensity exercise. This could be due to lower blood insulin downregulating pyruvate dehydrogenase, an enzyme that links the glycolytic pathway in the cytosol to the Krebs cycle in the mitochondria [8]. The researchers also suggested that liver glycogenolysis (the breakdown of glycogen) could be influenced by dietary carbohydrate intake, while gluconeogenesis (the process of making new glucose from lactate, glycerol, and other substrates) remained constant during the KD [9]. Whatever the mechanism, relying on KBs as a metabolic substrate appears to blunt the endurance athletes’ performance in maximal-intensity efforts.

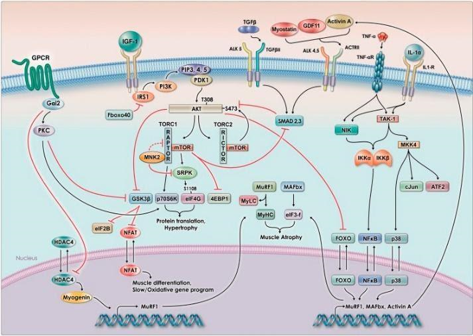

Another study compared the effects of the KD and a traditional Western diet (WD) on strength performance in nine elite male gymnasts around the age of 21 [10]. The researchers found that 30 days of the KD produced greater improvements in body composition than the WD, but no differences in strength or performance. Because the athletes’ training regimens were consistent throughout the year, each participant could undergo 30 days of the KD and 30 days of the WD, allowing the two treatments to be paired for comparison (a 3-month gap separated the two dieting periods). After 30 days on the KD, the athletes lost an average of 3.5 lbs of body weight and 4.2 lbs of fat mass. This translates to a 2.6% decrease in body fat percentage and a similar increase in lean mass percentage. Nevertheless, these changes in body composition did not affect the athletes’ muscle mass or athletic ability: neither parameter changed significantly after the KD or the WD.

The investigators attributed the weight loss to satiety from adequate protein intake, a greater ratio of fat breakdown to fat synthesis, a lower resting respiratory exchange ratio (meaning the predominant fuel at rest was fat), and elevated metabolism from gluconeogenesis and the thermic effect of protein [11]. They also noted that increasing muscle mass on the KD is difficult because blood insulin levels are so low, which dampens the muscle growth pathway involving IGF-1, AKT, and mTOR (Figure 4) [12]. Maintaining muscle mass on the KD is therefore a more reasonable and attainable goal than gaining it. This makes the KD particularly relevant to athletes in sports with weight classes, such as boxing: competitors who want to keep their muscle mass and strength while losing fat to make a lower weight class may reasonably benefit from short-term use of the KD. The KD thus seems like a reasonable option for a specific niche of athletes who may stand to gain (or lose) from periodic keto use.

Fig. 4. The signaling pathway for muscle hypertrophy relies on insulin and IGF-1 signaling, which increases phosphorylation of AKT and activates mTOR. mTOR activation triggers downstream effects that ultimately result in muscle hypertrophy (muscle growth). Figure taken and caption adapted from [12].

In the studies on endurance and strength athletes, daily net carbohydrate intake was well below the 50 g mark: around 10 to 35 g in the endurance study and 22 g in the strength study. Such low intakes are consistent with the substantial changes in body composition and weight observed over the short periods the KD was followed, compared with the long-term studies in the meta-analysis. Even though the meta-analysis showed that the KD produced greater weight loss than the LFD, the amount of weight lost over the 12-month period was unimpressive compared with the short-term studies. This may reflect differences between the study populations: the long-term studies were done on older, obese individuals, whereas the short-term ones were done on active people of various ages. This highlights the confounding effect of lifestyle and exercise level on dietary studies of the KD. It is virtually undeniable that diet and exercise are the cornerstones of weight loss programs, and that the combination is more effective than either alone.

The KD shows some promise for people who want to lose weight and are also generally active on a weekly basis. Although it may not improve athletic performance, it appears to benefit some markers of metabolic health, such as blood triglycerides and diastolic blood pressure, when used safely and correctly. Again, any new diet program you want to try should be discussed thoroughly with a qualified physician or dietitian. Given the scientific literature on the KD, I would say it shows promise for a certain niche of people who are focused on losing weight.

Citations

- [1] U.S. Department of Agriculture, U.S. Department of Health and Human Services. (2010) Dietary Guidelines for Americans, 2010.

- [2] Gunnars, K. (2019, 4 January) 5 Most Common Low-Carb Mistakes (And How to Avoid Them). Healthline.com.

- [3] Ketogenic diet breakdown. Perfectketo.com.

- [4] Pinckaers, P.J.M., Churchward-Venne, T.A., Bailey, D., van Loon, L.J.C. (2017) Ketone Bodies and Exercise Performance: The Next Magic Bullet or Merely Hype? Sports Med. 47:383-391. doi:10.1007/s40279-016-0577-y

- [5] Watanabe, S., Hirakawa, A., Aoe, S., Fukuda, K., Muneta, T. (2016) Basic Ketone Engine and Booster Glucose Engine for Energy Production. Diabetes Research – Open Journal. 2:14-23. doi:10.17140/DROJ-2-125

- [6] Bueno, N.B., et al. (2013) Very-Low-Carbohydrate Ketogenic Diet v. Low-Fat Diet for Long-Term Weight Loss: A Meta-Analysis of Randomised Controlled Trials. British Journal of Nutrition. 110(7):1178-1187. doi:10.1017/S0007114513000548

- [7] Zinn, C., Wood, M., Williden, M., Chatterton, S., Maunder, E. (2017) Ketogenic diet benefits body composition and well-being but not performance in a pilot case study of New Zealand endurance athletes. Journal of the International Society of Sports Nutrition. 14:22. doi:10.1186/s12970-017-0180-0

- [8] Peters, S.J., LeBlanc, P.J. (2004) Metabolic aspects of low carbohydrate diets and exercise. Nutrition and Metabolism. 1(1):7. doi:10.1186/1743-7075-1-7

- [9] Webster, C.C., et al. (2016) Gluconeogenesis during endurance exercise in cyclists habituated to a long-term low carbohydrate high-fat diet. The Journal of Physiology. 594(15):4389-4405. doi:10.1113/JP271934

- [10] Paoli, A., Grimaldi, K., D’Agostino, D., Cenci, L., Moro, T., Bianco, A., Palma, A. (2012) Ketogenic diet does not affect strength performance in elite artistic gymnasts. Journal of the International Society of Sports Nutrition. 9:34.

- [11] Paoli, A., Canato, M., Toniolo, L., Bargossi, A.M., Neri, M., Mediati, M., Alesso, D., Sanna, G., Grimaldi, K.A., Fazzari, A.L., Bianco, A. (2011) The ketogenic diet: an underappreciated therapeutic option? La Clinica Terapeutica. 162:e145-e153.

- [12] Egerman, M.A., Glass, D.J. (2014) Signaling pathways controlling skeletal muscle mass. Crit Rev Biochem Mol Biol. 49(1):59-68. doi:10.3109/10409238.2013.857291